Eclipse openDuT

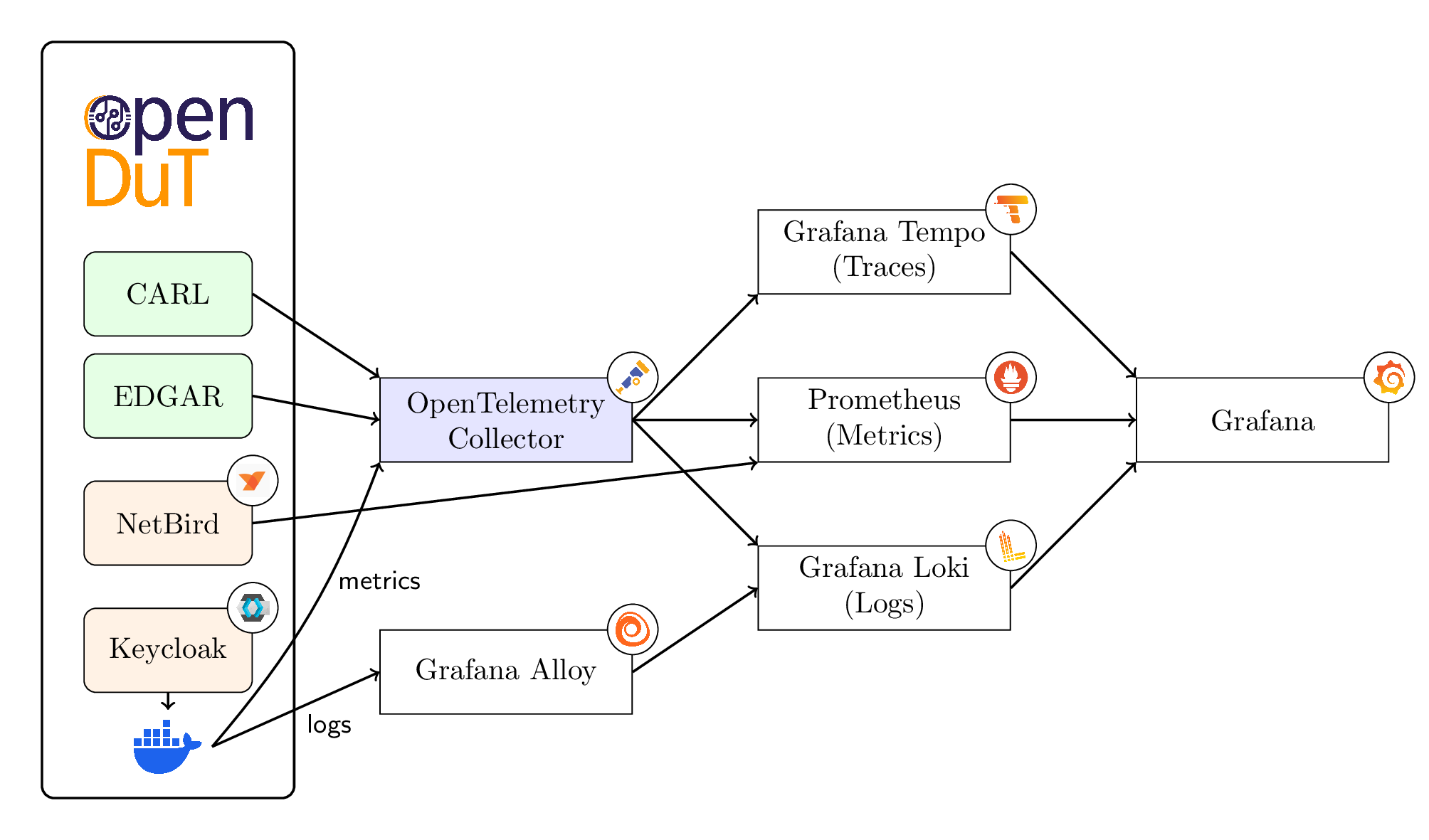

Eclipse openDuT provides an open framework to automate the testing and validation process for automotive software and applications in a reliable, repeatable and observable way. Eclipse openDuT is hardware-agnostic with respect to the execution environment and accommodates different hardware interfaces and standards with regard to the usability of the framework. It thereby supports both on-premise installations and hosting in a cloud infrastructure. Eclipse openDuT considers a potentially distributed network of real (HIL) and virtual (SIL) devices under test. Eclipse openDuT reflects hardware capabilities and constraints along with the chosen test method. Test cases are not limited to a specific domain, but the framework especially targets functional and explorative security tests.

User Manual

Learn how to use openDuT and its individual components.

User Manual for CARL

CARL provides the backend service for openDuT. It manages information about all the DuTs and coordinates how they are configured.

CARL also serves a web frontend, called LEA, for this purpose.

Setup of CARL

Currently, our setup is automated via Docker Compose.

If you want to use CARL and its components on a separate machine, e.g. a Raspberry Pi or any other machine, this guide shows all the steps necessary to get CARL up and running.

- Install Git, if not already installed, and check out the openDuT repository:

git clone https://github.com/eclipse-opendut/opendut.git

- Install Docker and Docker Compose v2, e.g. on Debian-based operating systems:

sudo apt install docker.io docker-compose-v2

- Optional: Change the Docker image location CARL should be pulled from in .ci/deploy/localenv/docker-compose.yml. By default, CARL is pulled from ghcr.io.

- Set up the /etc/hosts file: Add the following lines to the /etc/hosts file on the host system to access the services from the local network. This assumes that the system where openDuT was deployed has the IP address 192.168.56.10.

192.168.56.10 opendut.local
192.168.56.10 carl.opendut.local
192.168.56.10 auth.opendut.local
192.168.56.10 netbird-api.opendut.local
192.168.56.10 netbird-relay.opendut.local
192.168.56.10 signal.opendut.local
192.168.56.10 opentelemetry.opendut.local
192.168.56.10 monitoring.opendut.local
192.168.56.10 nginx-webdav.opendut.local

- Start the local test environment using Docker Compose.

# configure project path
export OPENDUT_REPO_ROOT=$(git rev-parse --show-toplevel)
# start provisioning and create .env file
docker compose --file ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/docker-compose.yml --env-file ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/.env.development up --build provision-secrets
# delete old secrets, if they exist, ensuring they are not copied to a subdirectory
rm -rf ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/data/secrets/
# copy the created secrets to the host, ensuring they are readable for the current user
docker cp opendut-provision-secrets:/provision/ ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/data/secrets/
# start the environment
docker compose --file ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/docker-compose.yml --env-file .ci/deploy/localenv/.env.development --env-file ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/data/secrets/.env up --detach --build

This step creates the secrets and starts all containers.

The secrets created by the first docker compose command can be found in .ci/deploy/localenv/data/secrets/.env. Domain names are configured in the environment file .env.development.

If everything worked and is up and running, you can follow the EDGAR Setup Guide.

Shutdown the environment

- Stop the local test environment using docker compose.

docker compose --file ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/docker-compose.yml down

- Destroy the local test environment using docker compose.

docker compose --file ${OPENDUT_REPO_ROOT:-.}/.ci/deploy/localenv/docker-compose.yml down --volumes

Configuration


- You can configure the log level of CARL via the environment variable OPENDUT_LOG.
  For example, to only show INFO logging and above, set it as OPENDUT_LOG=info.
  For more fine-grained control, see the documentation here: https://docs.rs/tracing-subscriber/latest/tracing_subscriber/filter/struct.EnvFilter.html#directives

- The general configuration of CARL can be set via environment variables or by manually creating a configuration file under /etc/opendut/carl.toml.
  The environment variables use the TOML keys in the configuration file, in capital letters, joined by underscores and prefixed by the application name. For example, to configure network.bind.host, use the environment variable OPENDUT_CARL_NETWORK_BIND_HOST.

The possible configuration values and their defaults can be seen here:

[network]

bind.host = "0.0.0.0"

bind.port = 8080

remote.host = "localhost"

remote.port = 8080

[network.tls]

enabled = true

certificate = "/etc/opendut/tls/carl.pem"

key = "/etc/opendut/tls/carl.key"

ca = "/etc/opendut/tls/ca.pem"

# use client certificate when connecting to other services (identity provider, opentelemetry collector, in the future also NetBird)

[network.tls.client.auth] #mTLS

enabled = false

certificate = "/etc/opendut/tls/client-auth.pem"

key = "/etc/opendut/tls/client-auth.key"

# validate certificates of connecting clients with the given certificate authority

[network.tls.server.auth] #mTLS

enabled = false

ca = "/etc/opendut/tls/ca.pem"

[network.oidc]

enabled = false

[network.oidc.client]

id = "tbd"

secret = "tbd"

# issuer url that CARL uses

issuer.url = "https://auth.opendut.local/realms/opendut/"

# issuer url that CARL tells the clients to use (required in test environment)

issuer.remote.url = "https://auth.opendut.local/realms/opendut/"

issuer.admin.url = "https://auth.opendut.local/admin/realms/opendut/"

scopes = ""

[network.oidc.client.tls]

ca = ""

[network.oidc.client.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[network.oidc.lea]

client.id = "opendut-lea-client"

issuer.url = "https://auth.opendut.local/realms/opendut/"

scopes = "openid,profile,email"

[persistence]

enabled = false

[persistence.database]

file = ""

[peer]

disconnect.timeout.ms = 30000

can.server_port_range_start = 10000

can.server_port_range_end = 20000

ethernet.bridge.name.default = "br-opendut"

[serve]

ui.directory = "opendut-lea/"

[vpn]

enabled = true

kind = ""

[vpn.netbird]

url = ""

ca = ""

auth.type = "oauth-create-api-token"

auth.secret = ""

# only for OIDC

auth.issuer = ""

auth.username = "netbird"

auth.password = ""

auth.scopes = ""

# retry requests to the NetBird API

timeout.ms = 10000

retries = 5

setup.key.expiration.ms = 86400000

[logging.pipe]

enabled = true

stream = "stdout"

[opentelemetry]

enabled = false

collector.endpoint = ""

service.name = "opendut-carl"

[opentelemetry.tls]

ca = ""

[opentelemetry.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[opentelemetry.metrics]

interval.ms = 60000

cpu.collection.interval.ms = 5000

EDGAR

EDGAR hooks your DuT up to openDuT. It is a program to be installed on a Linux host, which is placed next to your ECU. A single-board computer, like a Raspberry Pi, is good enough for this purpose.

Within openDuT, EDGAR is a Peer in the network.

Setup

1. Preparation

Make sure you can reach CARL from your target system.

For example, if CARL is hosted at carl.opendut.local, these two commands should work:

ping carl.opendut.local

curl https://carl.opendut.local

If you're self-hosting CARL, follow the instructions in Self-Hosted Backend Server.

2. Download EDGAR

In the LEA web-UI, you can find a Downloads-menu in the sidebar.

You will then need to transfer EDGAR to your target system, e.g. via scp.

Alternatively, you can download directly to your target host with:

curl https://$CARL_HOST/api/edgar/$ARCH/download --output opendut-edgar.tar.gz

Replace $CARL_HOST with the domain where your CARL is hosted (e.g. carl.opendut.local),

and replace $ARCH with the appropriate CPU architecture.

Available CPU architectures are:

- x86_64-unknown-linux-gnu (most desktop PCs and server systems)

- armv7-unknown-linux-gnueabihf (Raspberry Pi)

- aarch64-unknown-linux-gnu (ARM64 systems)
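If you are unsure which architecture applies, the choice can usually be derived from the output of uname -m on the target system. The following is a minimal sketch; the function names are hypothetical, and the mapping from uname -m values to the architectures listed above is an assumption based on common Linux conventions, not something provided by openDuT.

```shell
# Map a machine type (as printed by `uname -m`) to an EDGAR download architecture.
# The mapping is an assumption based on common Linux naming.
edgar_arch_for() {
    case "$1" in
        x86_64)        echo "x86_64-unknown-linux-gnu" ;;
        armv7l)        echo "armv7-unknown-linux-gnueabihf" ;;
        aarch64|arm64) echo "aarch64-unknown-linux-gnu" ;;
        *)             echo "unsupported machine type: $1" >&2; return 1 ;;
    esac
}

# Build the download URL from the CARL host and the machine type.
edgar_download_url() {
    local carl_host="$1" arch
    arch="$(edgar_arch_for "$2")" || return 1
    echo "https://${carl_host}/api/edgar/${arch}/download"
}
```

For example, curl "$(edgar_download_url carl.opendut.local "$(uname -m)")" --output opendut-edgar.tar.gz would download the archive matching the current machine.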

3. Unpack the archive

Run these commands to unpack EDGAR and change into the directory:

tar xf opendut-edgar.tar.gz

cd opendut-edgar/

EDGAR should print version information, if you run:

./opendut-edgar --version

If this prints an error, it is likely that you downloaded the wrong CPU architecture.

4. CAN Setup

If you want to use CAN, follow the steps in CAN Setup before continuing. If you do not want to use CAN, see Setup without CAN before continuing.

5. mTLS Client Authentication

If your backend requires mTLS Client Authentication, follow the steps in mTLS Client Authentication before continuing.

6. Plugins

Depending on your target hardware, you might want to use plugins to perform additional setup steps. If so, follow the steps in Plugins before continuing.

7. Scripted Setup

EDGAR comes with a scripted setup, which you can initiate by running:

./opendut-edgar setup managed

It will prompt you for a Setup-String. You can get a Setup-String from LEA or CLEO after creating a Peer.

This will configure your operating system and start the EDGAR Service, which will receive its configuration from CARL.

Self-Hosted Backend Server

DNS

If your backend server does not have a public DNS entry, you will need to adjust the /etc/hosts file,

by appending entries like this (replace 123.456.789.101 with your server's IP address):

123.456.789.101 opendut.local

123.456.789.101 carl.opendut.local

123.456.789.101 auth.opendut.local

123.456.789.101 netbird-api.opendut.local

123.456.789.101 netbird-relay.opendut.local

123.456.789.101 signal.opendut.local

123.456.789.101 nginx-webdav.opendut.local

123.456.789.101 opentelemetry.opendut.local

123.456.789.101 monitoring.opendut.local

Now the following command should complete without errors:

ping carl.opendut.local
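Typing the entries by hand is error-prone, so they can also be generated. The sketch below prints the host names listed above for a given IP address; the function name opendut_hosts_entries is hypothetical and not part of openDuT.

```shell
# Print /etc/hosts entries for the openDuT services listed in this guide.
# Usage: opendut_hosts_entries <ip-address>
opendut_hosts_entries() {
    local ip="$1" name
    for name in opendut.local carl.opendut.local auth.opendut.local \
                netbird-api.opendut.local netbird-relay.opendut.local \
                signal.opendut.local nginx-webdav.opendut.local \
                opentelemetry.opendut.local monitoring.opendut.local; do
        printf '%s %s\n' "$ip" "$name"
    done
}
```

To append the entries, you could run opendut_hosts_entries 192.168.56.10 | sudo tee -a /etc/hosts, with the IP address replaced by the one of your server.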

CAN Setup

If you want to use CAN, it is mandatory to set the environment variable OPENDUT_EDGAR_SERVICE_USER as follows:

export OPENDUT_EDGAR_SERVICE_USER=root

When a cluster is deployed, EDGAR automatically creates a virtual CAN interface (by default: br-vcan-opendut) that is used as a bridge between Cannelloni instances and physical CAN interfaces. EDGAR automatically connects all CAN interfaces defined for the peer in CARL to this bridge interface.

This also works with virtual CAN interfaces, so if you do not have a physical CAN interface and want to test the CAN functionality nevertheless, you can create a virtual CAN interface as follows. Afterwards, you will need to configure it for the peer in CARL.

# Optionally, replace vcan0 with another name

ip link add dev vcan0 type vcan

ip link set dev vcan0 up

Preparation

EDGAR relies on the Linux socketcan stack to perform local CAN routing and uses Cannelloni for CAN routing between EDGARs. Therefore, we have some dependencies.

- Install the following packages:

sudo apt install -y can-utils

- Download Cannelloni from here: https://github.com/eclipse-opendut/cannelloni/releases/

- Unpack the Cannelloni tarball and copy the files into your filesystem like so:

sudo cp libcannelloni-common.so.0 /lib/

sudo cp libsctp.so* /lib/

sudo cp cannelloni /usr/local/bin/

EDGAR will check during the scripted setup, whether these have been installed correctly.

Testing

Once you have configured everything and deployed the cluster, you can test the CAN connection between different EDGARs as follows:

- On the EDGAR leader, assuming the configured CAN interface on it is can0, execute:

candump -d can0

- On the EDGAR peer, execute (again, assuming can0 is configured here):

cansend can0 01a#01020304

Now you should see a CAN frame on the leader side:

root@host:~# candump -d can0
can0 01A [4] 01 02 03 04

Setup without CAN

Caution

Generally, you should set up CAN, since openDuT currently cannot show users whether an EDGAR has CAN support or not.

Therefore, if you don't set up CAN, you need to document for your users that they cannot use CAN on the given EDGAR.

If they do so anyway, undefined behavior and crashes will likely occur.

If you want to set up an EDGAR without CAN support even after reading the above warning,

you can pass --skip-can on the EDGAR CLI while running the EDGAR Setup.

mTLS Client Authentication

To configure EDGAR, so that it can connect to a backend that requires mTLS Client Authentication, run the following commands:

export OPENDUT_EDGAR_NETWORK_TLS_CLIENT_AUTH_ENABLED=true

export OPENDUT_EDGAR_NETWORK_TLS_CLIENT_AUTH_CERTIFICATE="" #Path or certificate content

export OPENDUT_EDGAR_NETWORK_TLS_CLIENT_AUTH_KEY="" #Path or key content

These values will be persisted into the EDGAR configuration file.

Make sure the certificate and key files are accessible by the opendut_service user

that will be created during the EDGAR Setup.

EDGAR makes client connections to multiple services: OpenDuT CARL, Identity Provider (OIDC) and OpenTelemetry Collector. If you need separate or no certificates and keys for OIDC or OpenTelemetry, you can additionally set the respective variables for OIDC and OpenTelemetry:

export OPENDUT_EDGAR_NETWORK_OIDC_CLIENT_TLS_CLIENT_AUTH_ENABLED=true

export OPENDUT_EDGAR_OPENTELEMETRY_TLS_CLIENT_AUTH_ENABLED=true

The corresponding variables ending in _CERTIFICATE and _KEY define separate certificates and keys for these services.

If they are not set, the values from OPENDUT_EDGAR_NETWORK_TLS_CLIENT_AUTH_{CERTIFICATE,KEY} will be used.
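The fallback can be pictured with a small shell sketch. It only mirrors the behavior described here and is not EDGAR's actual implementation; the function name is hypothetical, and the service-specific variable name in the usage note is inferred from the naming pattern above, so treat it as an assumption.

```shell
# Resolve the client certificate for a service: prefer the service-specific
# value (first argument), otherwise fall back to the general setting.
effective_client_cert() {
    local specific="$1"
    local general="${OPENDUT_EDGAR_NETWORK_TLS_CLIENT_AUTH_CERTIFICATE:-}"
    echo "${specific:-$general}"
}
```

For example, effective_client_cert "${OPENDUT_EDGAR_OPENTELEMETRY_TLS_CLIENT_AUTH_CERTIFICATE:-}" prints the OpenTelemetry-specific certificate if one is set, and the general client certificate otherwise.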

Plugins

You can use plugins to perform additional setup steps. This guide assumes you already have a set of plugins you want to use.

If so, follow these steps:

- Transfer your plugins archive to the target device.

- Unpack your archive in the plugins/ folder of the unpacked EDGAR distribution. This should result in a directory with one or more .wasm files and a plugins.txt file inside.

- Write the path to the unpacked directory into the top-level plugins.txt file.

This path can be relative to the plugins.txt file. The order of the paths in the plugins.txt file determines the order of execution for the plugins.
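The last step can be scripted. The following sketch appends an unpacked plugin directory to the top-level plugins.txt as a relative path; the function name and the directory name my-plugins are hypothetical, and the check for a nested plugins.txt simply follows the layout described above.

```shell
# Append a plugin directory to the top-level plugins.txt, relative to that file.
# $1: the plugins/ folder of the unpacked EDGAR distribution
# $2: name of the unpacked plugin directory inside plugins/ (hypothetical example: my-plugins)
register_plugin_dir() {
    local plugins_root="$1" plugin_dir="$2"
    if [ ! -f "${plugins_root}/${plugin_dir}/plugins.txt" ]; then
        echo "no plugins.txt found in ${plugins_root}/${plugin_dir}" >&2
        return 1
    fi
    echo "${plugin_dir}" >> "${plugins_root}/plugins.txt"
}
```

Calling register_plugin_dir plugins my-plugins from the EDGAR directory adds one line to plugins/plugins.txt. Since entries are appended, calling it several times keeps the order of the calls as the order of execution.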

Troubleshooting

- In case of issues during the setup, see the setup.log file, which will have been created next to the EDGAR executable.

- It might happen that the NetBird Client started by EDGAR is not able to connect. In that case, re-run the EDGAR setup.

- If this error appears:

ERROR opendut_edgar::service::cannelloni_manager: Failure while invoking command line program 'cannelloni': 'No such file or directory (os error 2)'.

Make sure you've completed the CAN Setup.

- EDGAR might start with an old IP address, different from what the following command prints:

sudo wg

In that particular case, stop the netbird service and the opendut-edgar service and re-run the setup. This might happen to all EDGARs. If this is not enough and EDGAR keeps getting the old IP address, it is necessary to set up all devices and clusters from scratch.

Troubleshooting Guide

Symptom Diagnosis

Symptom: Setup fails

Something failed before the EDGAR Service even started.

See EDGAR Setup Troubleshooting.

Symptom: EDGAR does not show up as online

The web-UI or CLI does not list an EDGAR as healthy.

See Troubleshooting EDGAR offline.

Symptom: The connection does not work

You cannot establish a connection with the ECU(s).

Check the interfaces are configured correctly

Run ip link and check that all of these interfaces exist:

- br-opendut
  EDGAR did not receive or roll out the configuration.
  See Troubleshooting EDGAR.

- wt0
  The WireGuard tunnel has not been set up correctly by NetBird.
  See Troubleshooting VPN connection.

- If you configured an Ethernet interface:
  - The Ethernet interface itself.
    Should show up with master br-opendut in the ip link output.
    See Troubleshooting the ECUs.
  - gre-... (ending in random letters and numbers)
    Should show up with master br-opendut in the ip link output.
    See Troubleshooting configuration rollout.

- If you configured a CAN interface:
  - The CAN interface itself.
    See Troubleshooting the ECUs.
  - br-vcan-opendut
    See Troubleshooting EDGAR.

- gre0, gretap0 and erspan0
  The Generic Routing Encapsulation (GRE) has not been set up correctly.
  See Troubleshooting EDGAR.
  If it is still not working, check if the ip_gre and gre kernel modules are available and can be loaded.
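The interface check can be automated with a small helper. The sketch below reads ip link output on standard input and prints every expected interface that does not appear in it; the function name is hypothetical, and the pattern assumes the usual "index: name:" line format of ip link.

```shell
# Read `ip link` output on stdin and print each expected interface
# that is missing. Returns non-zero if at least one is missing.
missing_interfaces() {
    local link_output name status=0
    link_output="$(cat)"
    for name in "$@"; do
        # Interfaces are listed as "<index>: <name>:" or "<index>: <name>@<parent>:".
        if ! printf '%s\n' "$link_output" | grep -Eq "^[0-9]+: ${name}[:@]"; then
            echo "$name"
            status=1
        fi
    done
    return "$status"
}
```

For example, ip link | missing_interfaces br-opendut wt0 gre0 gretap0 erspan0 prints nothing if all of these interfaces exist.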

Ping throughout the connection

- Ping wt0 as described in Troubleshooting VPN connection.

- Ping br-opendut:
  - Assign an IP address to br-opendut on each device, for example:

ip address add 192.168.123.101/24 dev br-opendut #on one device
ip address add 192.168.123.102/24 dev br-opendut #on the other device

    The IP addresses have to be in the same subnet.
  - Ping the assigned IP address of the other device:

ping 192.168.123.102 #on one device
ping 192.168.123.101 #on the other device

    If the ping works, the connection via openDuT should work. If it still does not, see Troubleshooting the ECUs.

Troubleshooting

Troubleshooting EDGAR

- If the setup completed, but EDGAR does not show up as Healthy in LEA/CLEO, see the service logs:

journalctl -u opendut-edgar

You can configure the log level of EDGAR via the environment variable OPENDUT_LOG, by setting it in the systemd unit file.

For example, to only show INFO logging and above, set it as OPENDUT_LOG=info.

For more fine-grained control, see the documentation here: https://docs.rs/tracing-subscriber/latest/tracing_subscriber/filter/struct.EnvFilter.html#directives

- Sometimes it helps to restart the EDGAR service:

# Restart service
sudo systemctl restart opendut-edgar
# Check status
systemctl status opendut-edgar

- Try rebooting the operating system. This clears out the interfaces, forcing EDGAR and NetBird to recreate them.

- When the configuration does not get rolled out, it can help to redeploy the cluster. In the web-UI or CLI, undeploy the cluster and then re-deploy it shortly after.
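One way to set OPENDUT_LOG for the service without editing the unit file itself is a systemd drop-in. The following sketch writes such a drop-in; the function name is hypothetical, and using a drop-in instead of editing the unit file is a suggestion based on standard systemd behavior, not an openDuT requirement.

```shell
# Write a systemd drop-in that sets OPENDUT_LOG for the opendut-edgar service.
# The target directory is a parameter, so the function can be tried out safely.
write_edgar_log_override() {
    local dropin_dir="$1" level="$2"
    mkdir -p "$dropin_dir"
    printf '[Service]\nEnvironment=OPENDUT_LOG=%s\n' "$level" > "${dropin_dir}/override.conf"
}
```

On a real system, the drop-in directory for this unit would be /etc/systemd/system/opendut-edgar.service.d, which requires root permissions. After writing the file, run sudo systemctl daemon-reload and restart the service for the change to take effect.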

Troubleshooting VPN connection

- See /opt/opendut/edgar/netbird/netbird status --detail. The remote peers should be listed as "Connected".

- Check the NetBird logs for errors:

cat /var/lib/netbird/client.log
cat /var/lib/netbird/netbird.err
cat /var/lib/netbird/netbird.out

- Try pinging wt0 between devices. Run ip address show wt0 on one device and copy the IP address. Then run ping $IP_ADDRESS on the other device, with $IP_ADDRESS replaced with the IP address.
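Instead of copying the address by hand, it can be extracted from the one-line output format of the ip command. This is a minimal sketch; the function name is hypothetical, and it assumes the field layout of ip -4 -o address show.

```shell
# Extract the first IPv4 address from `ip -4 -o address show <dev>` output on stdin.
first_ipv4() {
    awk '$3 == "inet" { split($4, parts, "/"); print parts[1]; exit }'
}
```

On one device, ip -4 -o address show wt0 | first_ipv4 prints the address of wt0, which you can then pass to ping on the other device.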

Troubleshooting the ECUs

It happens relatively often that we look for problems in openDuT when the actual cause is that the ECU or the wiring isn't working.

Here are some questions to help with that process:

- Is the ECU powered on?

- Is the ECU wired correctly?

- Is the ECU hooked up to the port of the edge device that you configured in openDuT?

- Does the setup work, if you replace openDuT with a physical wire?

User Manual for CLEO

CLEO is a CLI tool to create/read/update/delete resources in CARL.

By using a terminal you will be able to configure your resources via CLEO.

CLEO can currently access the following resources:

- Cluster descriptors

- Cluster deployments

- Peers

- Devices (DuTs)

- Container executors

Every resource can be created, listed, described and deleted. Some have additional features such as an option to generate a setup-key or search through them.

In general, CLEO offers a help command to display usage information about a command. Just use opendut-cleo help or opendut-cleo <subcommand> --help.

Setup for CLEO

- Download the opendut-cleo binary for your target from the openDuT GitHub project: https://github.com/eclipse-opendut/opendut/releases

- Unpack the archive on your target system.

- Add a configuration file /etc/opendut/cleo.toml (Linux) and configure at least the CARL host and port. The possible configuration values and their defaults can be seen here:

[network]

carl.host = "localhost"

carl.port = 8080

[network.tls]

ca = "/etc/opendut/tls/ca.pem"

domain.name.override = ""

[network.tls.client.auth] #mTLS

enabled = false

certificate = "/etc/opendut/tls/client-auth.pem"

key = "/etc/opendut/tls/client-auth.key"

[network.oidc]

enabled = false

[network.oidc.client]

id = "opendut-cleo-client"

issuer.url = "https://auth.opendut.local/realms/opendut/"

scopes = "openid,profile,email"

secret = "<tbd>"

[network.oidc.client.tls]

ca = ""

[network.oidc.client.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[logging.pipe]

enabled = true

stream = "stderr"

Download CLEO from CARL

It is also possible to download CLEO from one of CARL's endpoints. The downloaded archive contains the CLEO binary for the requested architecture, the necessary certificate file, and a setup script.

The archive can be requested at https://{CARL-HOST}/api/cleo/{architecture}/download.

Available architectures are:

- x86_64-unknown-linux-gnu

- armv7-unknown-linux-gnueabihf

- aarch64-unknown-linux-gnu

This might be the go-to way, if you want to use CLEO in your pipeline.

Once downloaded, extract the files with the command tar xvf opendut-cleo-{architecture}.tar.gz. The files are extracted into the current working directory.

To extract them into another directory of your choice, pass that directory to tar with the -C option.

Setup via CLEO command (recommended)

A Setup-String can be retrieved from LEA and used with the following command. The command will prompt you for the Setup-String after you run it.

opendut-cleo setup --persistent=<type>

The persistent flag is optional. Without the flag, the needed environment variables will be printed out to the terminal.

If the persistent flag is set to user or given without a value, a configuration file will be written to ~/.config/opendut/cleo/config.toml.

If it is set to system, the CLEO configuration file will be written to /etc/opendut/cleo.toml.

Setup via script

The script used to run CLEO will not set the environment variables for CLIENT_ID and CLIENT_SECRET. This has to be done by the users manually. This can easily be done by entering the following commands:

export OPENDUT_CLEO_NETWORK_OIDC_CLIENT_ID={{ CLIENT ID VARIABLE }}

export OPENDUT_CLEO_NETWORK_OIDC_CLIENT_SECRET={{ CLIENT SECRET VARIABLE }}

These two variables can be obtained by logging in to Keycloak.

The tarball contains the cleo-cli.sh shell script. When executed it starts CLEO after setting the

following environment variables:

OPENDUT_CLEO_NETWORK_OIDC_CLIENT_SCOPES

OPENDUT_CLEO_NETWORK_TLS_DOMAIN_NAME_OVERRIDE

OPENDUT_CLEO_NETWORK_TLS_CA

OPENDUT_CLEO_NETWORK_CARL_HOST

OPENDUT_CLEO_NETWORK_CARL_PORT

OPENDUT_CLEO_NETWORK_OIDC_ENABLED

OPENDUT_CLEO_NETWORK_OIDC_CLIENT_ISSUER_URL

SSL_CERT_FILE

SSL_CERT_FILE is a mandatory environment variable for the current state of the implementation and has the same value as the

OPENDUT_CLEO_NETWORK_TLS_CA. This might change in the future.

Using CLEO with parameters works by adding the parameters when executing the script, e.g.:

./cleo-cli.sh list peers

TL;DR

- Download the archive from https://{CARL-HOST}/api/cleo/{architecture}/download

- Extract it with tar xvf opendut-cleo-{architecture}.tar.gz

- Add two environment variables: export OPENDUT_CLEO_NETWORK_OIDC_CLIENT_ID={{ CLIENT ID VARIABLE }} and export OPENDUT_CLEO_NETWORK_OIDC_CLIENT_SECRET={{ CLIENT SECRET VARIABLE }}

- Execute cleo-cli.sh with parameters

Additional notes

- The CA certificate to be provided for CLEO depends on the certificate authority used on the server side for CARL.

Auto-Completion

You can use auto-completions in CLEO, which will fill in commands when you press TAB.

To set them up, run opendut-cleo completions SHELL where you need to replace SHELL with the shell that you use, e.g. bash, zsh or fish.

Then you need to pipe the output into a completions-file for your shell. See your shell's documentation for where to place these files.
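As a starting point, the sketch below prints a per-user completions file path for each of the shells mentioned above. The function name is hypothetical, and the paths are common conventions rather than anything defined by openDuT; in particular, the zsh path only works if ~/.zfunc has been added to fpath before compinit runs, so check your shell's documentation.

```shell
# Print a commonly used per-user completions file path for the given shell.
# These locations are conventions and may differ on your system.
cleo_completions_path() {
    case "$1" in
        bash) echo "${XDG_DATA_HOME:-$HOME/.local/share}/bash-completion/completions/opendut-cleo" ;;
        zsh)  echo "$HOME/.zfunc/_opendut-cleo" ;;
        fish) echo "${XDG_CONFIG_HOME:-$HOME/.config}/fish/completions/opendut-cleo.fish" ;;
        *)    echo "unknown shell: $1" >&2; return 1 ;;
    esac
}
```

For bash, you could then create the parent directory with mkdir -p "$(dirname "$(cleo_completions_path bash)")" and run opendut-cleo completions bash > "$(cleo_completions_path bash)".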

Commands

Listing resources

To list resources you can decide whether to display the resources in a table or in JSON-format.

The default output format is a table, which is used when the --output flag is omitted.

The --output flag is a global argument, so it can be used at any place in the command.

opendut-cleo list --output=<format> <openDuT-resource>

Creating resources

Whether an ID or connected devices have to be added to the command depends on the type of resource you create.

opendut-cleo create <resource>

Applying Configuration Files

To use configuration files, the resource topology can be written in a YAML format which can be applied with the following command:

opendut-cleo apply <FILE_PATH>

The YAML file can look like this:

---
version: v1
kind: PeerDescriptor
metadata:
  id: fc4f8da1-1d99-47e1-bbbb-34d0c5bf922a
  name: MyPeer
spec:
  location: Ulm
  network:
    interfaces:
    - id: 9a182365-47e8-49e3-9b8b-df4455a3a0f8
      name: eth0
      kind: ethernet
    - id: de7d7533-011a-4823-bc51-387a3518166c
      name: can0
      kind: can
      parameters:
        bitrate-kbps: 250
        sample-point: 0.8
        fd: true
        data-bitrate-kbps: 500
        data-sample-point: 0.8
  topology:
    devices:
    - id: d6cd3021-0d9f-423c-862e-f30b29438cbb
      name: ecu1
      description: ECU for controlling things.
      interface-id: 9a182365-47e8-49e3-9b8b-df4455a3a0f8
      tags:
      - ecu
      - automotive
    - id: fc699f09-1d32-48f4-8836-37e0a23cf794
      name: restbus-sim1
      description: Rest-Bus-Simulation for simulating other ECUs.
      interface-id: de7d7533-011a-4823-bc51-387a3518166c
      tags:
      - simulation
  executors:
  - id: da6ad5f7-ea45-4a11-aadf-4408bdb69e8e
    kind: container
    parameters:
      engine: podman
      name: nmap-scan
      image: debian
      volumes:
      - /etc/
      - /opt/
      devices:
      - ecu1
      - restbus-sim1
      envs:
      - name: VAR_NAME
        value: varValue
      ports:
      - 8080:8080
      command: nmap
      command-args:
      - -A
      - -T4
      - scanme.nmap.org
---
kind: ClusterDescriptor
version: v1
metadata:
  id: f90ffd64-ae3f-4ed4-8867-a48587733352
  name: MyCluster
spec:
  leader-id: fc4f8da1-1d99-47e1-bbbb-34d0c5bf922a
  devices:
  - d6cd3021-0d9f-423c-862e-f30b29438cbb
  - fc699f09-1d32-48f4-8836-37e0a23cf794

The id fields contain UUIDs. You can generate a random UUID when newly creating a resource with the opendut-cleo create uuid command.

Generating PeerSetup Strings

To create a PeerSetup, it is necessary to provide the PeerID of the peer:

opendut-cleo generate-setup-string <PeerID>

Decoding PeerSetup Strings

If you have a peer setup string, and you want to analyze its content, you can use the decode command.

opendut-cleo decode-setup-string <String>

Describing resources

To describe a resource, the ID of the resource has to be provided. The output can be displayed as text or JSON-format (pretty-json with line breaks or json without).

opendut-cleo describe --output=<output format> <resource> --id <ID of resource>

Finding resources

You can search for resources by specifying a search criteria string with the find command. Wildcards such as '*' are also supported.

opendut-cleo find <resource> "<at least one search criteria>"

Delete resources

Specify the type of resource and its ID you want to delete in CARL.

opendut-cleo delete <resource> --id <ID of resource>

Usage Examples

CAN Example

# CREATE PEER

opendut-cleo create peer --name "$NAME" --location "$NAME"

# CREATE NETWORK INTERFACE

opendut-cleo create network-interface --peer-id "$PEER_ID" --type can --name vcan0

# CREATE DEVICE

opendut-cleo create device --peer-id "$PEER_ID" --name device-"$NAME"-vcan0 --interface vcan0

# CREATE SETUP STRING

opendut-cleo generate-setup-string --id "$PEER_ID"

Ethernet Example

# CREATE PEER

opendut-cleo create peer --name "$NAME" --location "$NAME"

# CREATE NETWORK INTERFACE

opendut-cleo create network-interface --peer-id "$PEER_ID" --type eth --name eth0

# CREATE DEVICE

opendut-cleo create device --peer-id "$PEER_ID" --name device-"$NAME"-eth0 --interface eth0

# CREATE SETUP STRING

opendut-cleo generate-setup-string --id "$PEER_ID"

CLEO and jq

jq is a command-line tool that pretty-prints JSON and extracts values from it. Piping CLEO's JSON output through jq makes it possible to automate CLEO in scripts.

Basic jq

- jq -r prints strings raw, i.e. without the surrounding quotes.

- [] constructs an array

- {} constructs an object

e.g. jq '[ .[] | { "name": .name, "id": .id } ]' or, shorter: jq '[ .[] | { name, id } ]'

input

opendut-cleo list --output=pretty-json peers

output

This output will be exemplary for the following jq commands.

[

{

"name": "HelloPeer",

"id": "90dfc639-4b4a-4bbb-bad3-6f037fcde013",

"status": "Disconnected"

},

{

"name": "Edgar",

"id": "defe10bb-a12a-4ad9-b18e-8149099dd044",

"status": "Connected"

},

{

"name": "SecondPeer",

"id": "c3333d4e-9b1a-4db5-9bfa-7a0a40680f1a",

"status": "Disconnected"

}

]

input

opendut-cleo list --output=json peers | jq '[.[].name]'

output

jq extracts the names of every json element in the list of peers.

[

"HelloPeer",

"Edgar",

"SecondPeer"

]

The surrounding brackets in the filter collect the names into an array. Without them, i.e. with opendut-cleo list --output=json peers | jq '.[].name', jq prints one name per line.

input

opendut-cleo list --output=json peers | jq '[.[] | select(.status=="Disconnected")]'

output

[

{

"name": "HelloPeer",

"id": "90dfc639-4b4a-4bbb-bad3-6f037fcde013",

"status": "Disconnected"

},

{

"name": "SecondPeer",

"id": "c3333d4e-9b1a-4db5-9bfa-7a0a40680f1a",

"status": "Disconnected"

}

]

input

opendut-cleo list --output=json peers | jq -r '.[] | select(.status=="Connected") | .id' | xargs -I{} opendut-cleo describe peer --id {}

output

Peer: Edgar

Id: defe10bb-a12a-4ad9-b18e-8149099dd044

Devices: [device-1, The Device, Another Device, Fubar Device, Lost Device]

Get the number of the peers

opendut-cleo list --output=json peers | jq 'length'

Sort peers by name

opendut-cleo list --output=json peers | jq 'sort_by(.name)'

Configuration

This is a high-level guide for how to configure openDuT applications, including useful tips and tricks.

Setting values

The configuration can be set via environment variables or by manually creating a configuration file, e.g. under /etc/opendut/carl.toml.

The environment variables use the TOML keys from the configuration file in capital letters, joined by underscores and prefixed by the application name.

For example, to configure network.bind.host in CARL, use the environment variable OPENDUT_CARL_NETWORK_BIND_HOST.

See the end of this file for the configuration file format.
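The naming rule can be expressed as a small helper, which is handy when translating a configuration file into environment variables. This is a sketch of the rule as described above; the function name opendut_env_name is hypothetical.

```shell
# Derive the environment variable name for a TOML key of an openDuT application:
# prefix with OPENDUT_<APP>_, upper-case everything, replace dots with underscores.
opendut_env_name() {
    local app="$1" key="$2"
    printf 'OPENDUT_%s_%s\n' "$app" "$key" | LC_ALL=C tr 'a-z.' 'A-Z_'
}
```

For example, opendut_env_name carl network.bind.host prints OPENDUT_CARL_NETWORK_BIND_HOST, and opendut_env_name cleo network.oidc.client.id prints OPENDUT_CLEO_NETWORK_OIDC_CLIENT_ID.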

TLS certificates

When configuring a TLS certificate/key, you can provide either a file path or the text of the certificate directly. The latter is useful in particular when working with environment variables.

You can provide separate CA certificates for individual backend services, namely OpenTelemetry, NetBird and OIDC.

If you do not do so, the CA certificate from network.tls.ca will be used as the default.

Log level

You can configure the log level via the environment variable OPENDUT_LOG.

For example, to only show INFO logging and above, set it as OPENDUT_LOG=info.

For more fine-grained control, see the documentation here: https://docs.rs/tracing-subscriber/latest/tracing_subscriber/filter/struct.EnvFilter.html#directives
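As an illustration of such directives, the following sketch keeps the global level at info but raises a single module to debug. The module path is taken from the EDGAR error message quoted in the setup troubleshooting section; whether that particular target is the one you need is an assumption.

```shell
# Global level "info", but "debug" for one module path (target).
# Directives are separated by commas; a directive without a target sets the default level.
export OPENDUT_LOG="info,opendut_edgar::service::cannelloni_manager=debug"
```

The variable has to be set in the environment of the application itself, e.g. before starting it from the same shell.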

Configuration file format

These are example configurations of the different applications, together with their default values.

CARL

[network]

bind.host = "0.0.0.0"

bind.port = 8080

remote.host = "localhost"

remote.port = 8080

[network.tls]

enabled = true

certificate = "/etc/opendut/tls/carl.pem"

key = "/etc/opendut/tls/carl.key"

ca = "/etc/opendut/tls/ca.pem"

# use client certificate when connecting to other services (identity provider, opentelemetry collector, in the future also NetBird)

[network.tls.client.auth] #mTLS

enabled = false

certificate = "/etc/opendut/tls/client-auth.pem"

key = "/etc/opendut/tls/client-auth.key"

# validate certificates of connecting clients with the given certificate authority

[network.tls.server.auth] #mTLS

enabled = false

ca = "/etc/opendut/tls/ca.pem"

[network.oidc]

enabled = false

[network.oidc.client]

id = "tbd"

secret = "tbd"

# issuer url that CARL uses

issuer.url = "https://auth.opendut.local/realms/opendut/"

# issuer url that CARL tells the clients to use (required in test environment)

issuer.remote.url = "https://auth.opendut.local/realms/opendut/"

issuer.admin.url = "https://auth.opendut.local/admin/realms/opendut/"

scopes = ""

[network.oidc.client.tls]

ca = ""

[network.oidc.client.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[network.oidc.lea]

client.id = "opendut-lea-client"

issuer.url = "https://auth.opendut.local/realms/opendut/"

scopes = "openid,profile,email"

[persistence]

enabled = false

[persistence.database]

file = ""

[peer]

disconnect.timeout.ms = 30000

can.server_port_range_start = 10000

can.server_port_range_end = 20000

ethernet.bridge.name.default = "br-opendut"

[serve]

ui.directory = "opendut-lea/"

[vpn]

enabled = true

kind = ""

[vpn.netbird]

url = ""

ca = ""

auth.type = "oauth-create-api-token"

auth.secret = ""

# only for OIDC

auth.issuer = ""

auth.username = "netbird"

auth.password = ""

auth.scopes = ""

# retry requests to the NetBird API

timeout.ms = 10000

retries = 5

setup.key.expiration.ms = 86400000

[logging.pipe]

enabled = true

stream = "stdout"

[opentelemetry]

enabled = false

collector.endpoint = ""

service.name = "opendut-carl"

[opentelemetry.tls]

ca = ""

[opentelemetry.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[opentelemetry.metrics]

interval.ms = 60000

cpu.collection.interval.ms = 5000

EDGAR

[carl]

disconnect.timeout.ms = 30000

[peer]

id = ""

[network]

carl.host = "localhost"

carl.port = 8080

connect.retries = 10

connect.interval.ms = 5000

[network.tls]

ca = "/etc/opendut/tls/ca.pem"

domain.name.override = ""

[network.tls.client.auth] #mTLS

enabled = false

certificate = "/etc/opendut/tls/client-auth.pem"

key = "/etc/opendut/tls/client-auth.key"

[network.oidc]

enabled = false

[network.oidc.client]

id = "opendut-edgar-client"

issuer.url = "https://auth.opendut.local/realms/opendut/"

scopes = "openid,profile,email"

secret = "<tbd>"

[network.oidc.client.tls]

ca = ""

[network.oidc.client.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[network.interface.management]

enabled = true

[vpn]

enabled = true

[vpn.disabled]

remote.host = ""

[vpn.netbird.client]

log.level = "WARN"

[logging.pipe]

enabled = true

stream = "stdout"

[opentelemetry]

enabled = false

collector.endpoint = ""

service.name = "opendut-edgar"

[opentelemetry.tls]

ca = ""

[opentelemetry.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[opentelemetry.metrics]

interval.ms = 60000

cpu.collection.interval.ms = 5000

[opentelemetry.metrics.cluster]

ping.interval.ms = 30000

target.bandwidth.kilobit.per.second = 100_000

rperf.backoff.max.elapsed.time.ms = 120000

CLEO

[network]

carl.host = "localhost"

carl.port = 8080

[network.tls]

ca = "/etc/opendut/tls/ca.pem"

domain.name.override = ""

[network.tls.client.auth] #mTLS

enabled = false

certificate = "/etc/opendut/tls/client-auth.pem"

key = "/etc/opendut/tls/client-auth.key"

[network.oidc]

enabled = false

[network.oidc.client]

id = "opendut-cleo-client"

issuer.url = "https://auth.opendut.local/realms/opendut/"

scopes = "openid,profile,email"

secret = "<tbd>"

[network.oidc.client.tls]

ca = ""

[network.oidc.client.tls.client.auth] #mTLS

enabled = "unset" # use default defined in network.tls.client.auth, otherwise set this to 'true' or 'false'

certificate = ""

key = ""

[logging.pipe]

enabled = true

stream = "stderr"

Test Execution

In a nutshell, test execution in openDuT works by executing containerized (Docker or Podman) test applications on a peer and uploading the results to a WebDAV directory. Test executors can be configured through either CLEO or LEA.

The container image specified by the image parameter in the test executor configuration can either be a container image already present on the peer or an image remotely available, e.g., on Docker Hub.

A containerized test application is expected to move all test results to be uploaded to the /results/ directory within its container and create an empty file /results/.results_ready when all results have been copied there. When this file exists, or when the container exits and no results have been uploaded yet, EDGAR creates a ZIP archive from the contents of the /results directory and uploads it to the WebDAV server specified by the results-url parameter in the test executor configuration.
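From the point of view of a test application, the contract described above could look like the following sketch. The function name `publish_results` and the report file name used in the test are hypothetical; only the /results/ directory and the .results_ready marker file come from the description above.

```rust
use std::fs::{self, File};
use std::io;
use std::path::Path;

/// Writes one report into the results directory and then creates the empty
/// marker file `.results_ready`. The marker is created last, so it only
/// exists once the report has been written completely.
/// `publish_results` is a hypothetical helper used for illustration only.
fn publish_results(results_dir: &Path, report_name: &str, report: &str) -> io::Result<()> {
    fs::create_dir_all(results_dir)?;
    fs::write(results_dir.join(report_name), report)?;
    File::create(results_dir.join(".results_ready"))?;
    Ok(())
}
```

Inside a test container, such a helper would be called with `Path::new("/results")` as the results directory.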

In the testenv launched by THEO, a WebDAV server is started automatically and can be reached at http://nginx-webdav/.

In the Local Test Environment, a WebDAV server is also started automatically and reachable at http://nginx-webdav.opendut.local.

Note that the execution of executors is only triggered by deploying the cluster.

Test Execution using CLEO

In CLEO, test executors can be configured either by passing all configuration parameters as command line arguments...

$ opendut-cleo create container-executor --help

Create a container executor using command-line arguments

Usage: opendut-cleo create container-executor [OPTIONS] --peer-id <PEER_ID> --engine <ENGINE> --image <IMAGE>

Options:

--peer-id <PEER_ID> ID of the peer to add the container executor to

-e, --engine <ENGINE> Engine [possible values: docker, podman]

-n, --name <NAME> Container name

-i, --image <IMAGE> Container image

-v, --volumes <VOLUMES>... Container volumes

--devices <DEVICES>... Container devices

--envs <ENVS>... Container envs

-p, --ports <PORTS>... Container ports

-c, --command <COMMAND> Container command

-a, --args <ARGS>... Container arguments

-r, --results-url <RESULTS_URL> URL to which results will be uploaded

-h, --help Print help

...or by providing the executor as part of a YAML file via opendut-cleo apply.

See Applying Configuration Files for more information.

Test Execution Through LEA

In LEA, executors can be configured via the tab Executor during peer configuration, using similar parameters as for CLEO.

Developer Manual

Learn how to get started, the workflow and tools we use, and what our architecture looks like.

Getting Started

Development Setup

Install the Rust toolchain: https://www.rust-lang.org/tools/install

You may need additional dependencies. On Ubuntu/Debian, these can be installed with:

sudo apt install build-essential pkg-config libssl-dev

To see if your development setup is generally working, you can run cargo ci check in the project directory.

Mind that this runs the unit tests and additional code checks and may occasionally show warnings/errors related to those, rather than pure build errors.

Tips & Tricks

- cargo ci contains many utilities for development in general.

- To view this documentation fully rendered, run cargo ci doc book open.

- To have your code validated more extensively, e.g. before publishing your changes, run cargo ci check.

Starting Applications

- Run CARL (backend):

cargo carl

You can then open the UI by going to https://localhost:8080/ in your web browser.

- Run CLEO (CLI for managing CARL):

cargo cleo

- Run EDGAR (edge software):

cargo edgar service

caution

Mind that this is in a somewhat broken state and may be removed in the future,

as it's normally necessary to add the peer in CARL and then go through edgar setup.

For a more realistic environment, see Test Environment.

UI Development

Run cargo lea to continuously build the newest changes in the LEA codebase.

Then you can simply refresh your browser to see them.

Git Workflow

Pull requests

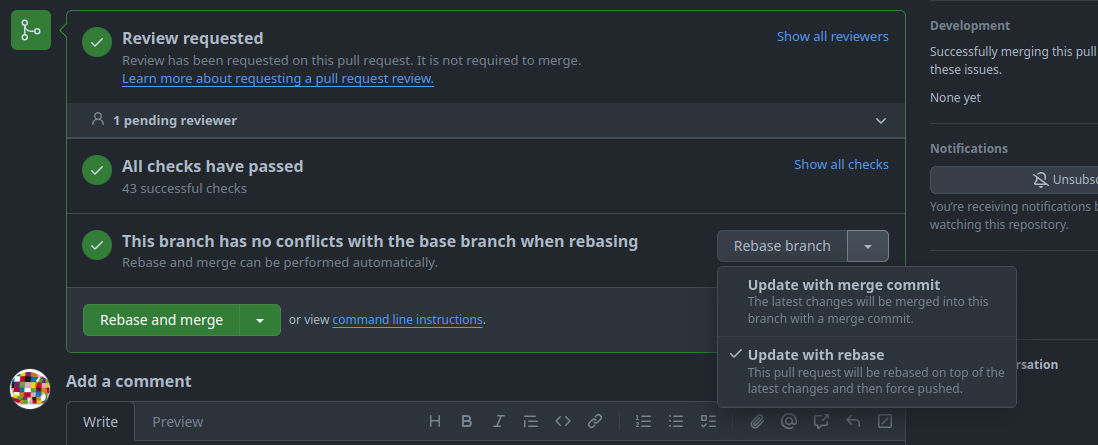

Update Branch

Our goal is to maintain a linear Git history. Therefore, we prefer git rebase over git merge [1]. The same applies when using the GitHub WebUI to update a PR's branch.

- Update with merge commit:

The first option creates a merge commit to pull in the changes from the PR's target branch. This is against our goal of a linear history, so we do not use this option.

- Update with rebase:

The second option rebases the changes of the feature branch on top of the PR's target branch. This is the option we prefer and use.

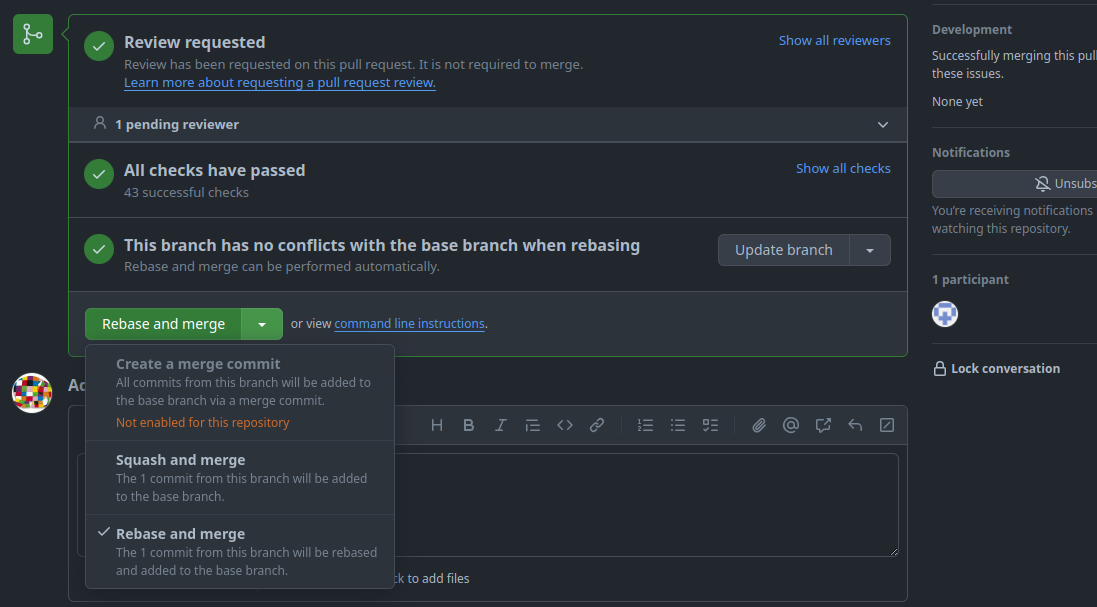

Rebase and Merge

As said above, our goal is to maintain a linear Git history. A problem arises when we want to merge pull requests (PRs), because none of the options the GitHub WebUI offers for merging a branch is suitable for us:

- Create a merge commit:

The first option creates a merge commit to pull in the changes from the PR's branch. This is against our goal of a linear history, so we disabled this option.

- Squash and merge:

The second option squashes all commits in the PR into a single commit and adds it to the PR's target branch. With this option, it is not possible to keep the commits of the PR separate.

- Rebase and merge:

The third option rebases the changes of the feature branch on top of the PR's target branch. This would be our preferred option, but "The rebase and merge behavior on GitHub deviates slightly from git rebase. Rebase and merge on GitHub will always update the committer information and create new commit SHAs" [2]. This doubles the number of commits and spams the history unnecessarily. Therefore, we do not use this option either.

The only viable option for us is to rebase and merge the changes via the command line. The procedures slightly differ according to the location of the feature branch.

Feature branch within the same repository

This example illustrates the procedure to merge a feature branch fubar into a target branch development.

- Update the target branch with the latest changes.

git pull --rebase origin development

- Switch to the feature branch.

git checkout fubar

- Rebase the changes of the feature branch on top of the target branch.

git rebase development

This is a good moment to run test and validation tasks locally to verify the changes.

- Switch to the target branch.

git checkout development

- Merge the changes of the feature branch into the target branch.

git merge --ff-only fubar

The --ff-only argument at this point is optional, because we rebased the feature branch and git automatically detects that a fast-forward is possible. But this flag prevents a merge commit if we messed up one of the previous steps.

- Push the changes.

git push origin development

Feature branch of a fork repository

This example illustrates the procedure to merge a feature branch foo from a fork bar of the user doe into a target branch development.

- Update the target branch with the latest changes.

git pull --rebase origin development

- From the project repository, check out a new branch.

git checkout -b doe-foo development

- Pull in the changes from the fork.

git pull git@github.com:doe/bar.git foo

- Rebase the changes of the feature branch on top of the target branch.

git rebase development

This is a good moment to run test and validation tasks locally to verify the changes.

- Switch to the target branch.

git checkout development

- Merge the changes of the feature branch into the target branch.

git merge --ff-only doe-foo

The --ff-only argument at this point is optional, because we rebased the feature branch and git automatically detects that a fast-forward is possible. But this flag prevents a merge commit if we messed up one of the previous steps.

- Push the changes.

git push origin development

[1] Except git merge --ff-only.

[2] GitHub; About pull requests; Rebasing and merging your commits

Testing

- Run tests with cargo ci check.

Integration tests

There are special test cases in the code base that try to interact with a service run in the test environment. These tests only run when the respective service is started.

Those tests are flagged as such using the crate test-with and assume the presence or absence of an environment variable:

- The following test is only run if the environment variable OPENDUT_RUN_<service-name-in-upper-case>_INTEGRATION_TESTS is present:

#[test_with::env(OPENDUT_RUN_KEYCLOAK_INTEGRATION_TESTS)]
#[test]
fn test_communication_with_service_works() {
    assert!(true);
}
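The naming scheme of these flags can be sketched with the standard library alone. The helper names `integration_test_flag` and `integration_tests_enabled` are hypothetical and only illustrate the convention; the actual gating is done by the test-with attribute shown above.

```rust
use std::env;

/// Builds the flag name OPENDUT_RUN_<SERVICE>_INTEGRATION_TESTS for a service.
/// Hypothetical helper used for illustration only.
fn integration_test_flag(service: &str) -> String {
    format!("OPENDUT_RUN_{}_INTEGRATION_TESTS", service.to_uppercase())
}

/// Returns true if the flag for the given service is present in the
/// environment, regardless of its value.
fn integration_tests_enabled(service: &str) -> bool {
    env::var_os(integration_test_flag(service)).is_some()
}
```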

See Run OpenDuT integration tests for details.

Running tests with logging

By default, log output is suppressed when running tests.

To see log output when running tests, mark your test with the #[test_log::test] attribute instead of the standard #[test] attribute, for example:

#[test_log::test]
fn it_still_works() {
    // ...
}

And set the environment variable RUST_LOG to the desired log level before running the tests.

To see all log messages at the debug level and above, you can then run:

RUST_LOG=debug cargo ci check

Test Environment

openDuT can be tricky to test, as it needs to modify the operating system to function and supports networking in a distributed setup.

To aid in this, we offer a virtualized test environment for development.

This test environment is set up with the help of a command line tool called theo.

THEO stands for Test Harness Environment Operator.

It is recommended to start everything in a virtual machine, but you may also start the service on the host with docker compose if applicable.

Setup of the virtual machine is done with Vagrant, VirtualBox and Ansible.

The following services are included in docker:

- CARL (OpenDuT backend software)

- EDGAR (OpenDuT edge software)

- firefox container for UI testing (accessible via http://localhost:3000)

- includes certificate authorities and is running in headless mode

- is running in same network as carl and edgar (working DNS resolution!)

- NetBird (third-party software that provides a WireGuard-based VPN)

- Keycloak (third-party software that provides authentication and authorization)

Operational modes

There are two ways of operation for the test environment:

Test mode

Run everything in Docker (either on your host or, preferably, in a virtual machine). You may use the OpenDuT Browser to access the services. The OpenDuT Browser is a web browser running in a docker container in the same network as the other services. All certificates are pre-installed and the browser is running in headless mode. It is accessible from your host via http://localhost:3000.

Development mode

Run CARL on the host in your development environment of choice and the rest in Docker. In this case, a proxy runs in the docker environment. It works as a drop-in replacement for CARL in the docker environment and forwards the traffic to CARL running in an integrated development environment on the host.

Getting started

Follow the setup steps. Then you may start the test environment in the virtual machine or in plain docker.

- And use it in test mode

- Or use it in development mode.

- If you want to build the project in the virtual machine you may also want to give it more resources (cpu/memory).

There are some known issues with the test environment (most of them on Windows), see Known Issues.

Set up the test environment

Notes about the virtual machine

There are some important adaptations made to the virtual machine that you should be aware of.

caution

Within the VM the rust target directory CARGO_TARGET_DIR is overridden to /home/vagrant/rust-target.

When running cargo within the VM, output will be placed in this directory!

This is done to avoid issues with hardlinks when cargo is run on a filesystem that does not support them (like vboxsf, the VirtualBox shared folder filesystem).

There is one exception to this. The distribution build with cargo-ci is placed in a subdirectory of the project, namely target/ci/distribution.

The temporary files of cargo-cross are still placed in /home/vagrant/rust-target, but the final build artifacts are copied to target/ci/distribution within the project directory.

This should be fine since cargo-cross is building everything in docker anyway.

Furthermore, we use cicero for installing project dependencies like trunk and cargo-cross.

To avoid linking issues with binaries installed by cicero, we also set up a dedicated virtual environment for cicero in the VM.

This is done by overwriting the CICERO_VENV_INSTALL_DIR environment variable to /home/vagrant/.cache/opendut/cicero/.

Setup THEO on Linux in VM

You may run all the containers in a virtual machine, using Vagrant.

This is the recommended way to run the test environment.

It will create a private network (subnet 192.168.56.0/24).

The virtual machine itself has the IP address: 192.168.56.10.

The docker network for the backend has the IP subnet: 192.168.32.0/24.

The docker network for the EDGAR peers has the IP subnet: 192.168.38.0/24.

Make sure those network addresses are not occupied or in conflict with other networks accessible from your machine.
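Whether one of your existing networks collides with the subnets listed above can be checked with a few lines of standard-library code. The type `Subnet` and its methods are hypothetical and not part of openDuT; the sketch simply compares the address bits covered by the shorter of the two prefixes.

```rust
use std::net::Ipv4Addr;

/// An IPv4 network in CIDR notation, e.g. 192.168.56.0/24.
/// Hypothetical type used for illustration only.
#[derive(Clone, Copy)]
struct Subnet {
    network: Ipv4Addr,
    prefix_len: u8,
}

impl Subnet {
    /// Netmask as a 32-bit integer; a prefix length of 0 yields an all-zero mask.
    fn mask(&self) -> u32 {
        let host_bits = 32u32.saturating_sub(u32::from(self.prefix_len));
        u32::MAX.checked_shl(host_bits).unwrap_or(0)
    }

    /// Two subnets overlap if they agree on all bits covered by the shorter prefix.
    fn overlaps(&self, other: &Subnet) -> bool {
        let mask = self.mask() & other.mask();
        (u32::from(self.network) & mask) == (u32::from(other.network) & mask)
    }
}
```

For example, a home network 192.168.0.0/16 would overlap with the private network of the virtual machine, while 10.0.0.0/8 would not.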

Requirements

- Install Vagrant

Ubuntu / Debian

sudo apt install vagrant

On most other Linux distributions, the package is called vagrant. If the package is not available for your distribution, you may need to add a package repository as described here: https://developer.hashicorp.com/vagrant/install#linux

- Install VirtualBox (see https://www.virtualbox.org)

sudo apt install virtualbox

To get a version compatible with Vagrant, you may need to add the APT repository as described here: https://www.virtualbox.org/wiki/Linux_Downloads#Debian-basedLinuxdistributions

- Create or check if an SSH key pair is present in ~/.ssh/id_rsa

mkdir -p ~/.ssh
ssh-keygen -t rsa -b 4096 -C "opendut-vm" -f ~/.ssh/id_rsa

You can create a symlink to your existing key as well.

Setup virtual machine

- Start the virtual machine via cargo (or use Vagrant directly, see Advanced Usage):

cargo theo vagrant up

- Login to the virtual machine

cargo theo vagrant ssh

- Update /etc/hosts on your host machine

192.168.56.10 opendut.local
192.168.56.10 carl.opendut.local
192.168.56.10 auth.opendut.local
192.168.56.10 netbird-api.opendut.local
192.168.56.10 netbird-relay.opendut.local
192.168.56.10 signal.opendut.local
192.168.56.10 nginx-webdav.opendut.local
192.168.56.10 opentelemetry.opendut.local
192.168.56.10 monitoring.opendut.local
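If the virtual machine uses a different address, the entries can be generated rather than typed by hand. The following is a minimal sketch; the constant `OPENDUT_HOSTNAMES` and the function `hosts_entries` are hypothetical names, and the hostnames are copied from the list above.

```rust
/// Hostnames of the test environment, as listed above.
const OPENDUT_HOSTNAMES: [&str; 9] = [
    "opendut.local",
    "carl.opendut.local",
    "auth.opendut.local",
    "netbird-api.opendut.local",
    "netbird-relay.opendut.local",
    "signal.opendut.local",
    "nginx-webdav.opendut.local",
    "opentelemetry.opendut.local",
    "monitoring.opendut.local",
];

/// Renders one /etc/hosts line per hostname for the given IP address.
/// Hypothetical helper used for illustration only.
fn hosts_entries(ip: &str) -> String {
    OPENDUT_HOSTNAMES
        .iter()
        .map(|name| format!("{ip} {name}\n"))
        .collect()
}
```

The same helper covers the plain Docker setup when called with 127.0.0.1.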

Setup THEO on Windows in VM

This guide will help you set up THEO on Windows.

Requirements

The following instructions use chocolatey to install the required software.

If you don't have chocolatey installed, you can find the installation instructions here.

You may also install the required software manually or e.g. use the Windows Package Manager winget (Hashicorp.Vagrant, Oracle.VirtualBox, Git.Git).

- Install vagrant and virtualbox

choco install -y vagrant virtualbox

- Install git and configure git to respect line endings

choco install git.install --params "'/GitAndUnixToolsOnPath /WindowsTerminal'"

- Create or check if an SSH key pair is present in ~/.ssh/id_rsa

mkdir -p ~/.ssh
ssh-keygen -t rsa -b 4096 -C "opendut-vm" -f ~/.ssh/id_rsa

Info

Vagrant creates a VM which mounts a Windows file share on /vagrant, where the openDuT repository was cloned. The openDuT project contains bash scripts that would break if their line endings were converted to crlf on Windows. Therefore, a .gitattributes file containing

*.sh text eol=lf

was added to the repository to make sure the bash scripts keep eol=lf when cloned on Windows. As an alternative, you may consider using the cloned opendut repo on the Windows host only for the vagrant VM setup part. For working with THEO, you can use the cloned opendut repository inside the Linux guest system instead (/home/vagrant/opendut).

Setup virtual machine

- Add the following environment variables to point vagrant to the vagrant file

Git Bash:

export OPENDUT_REPO_ROOT=$(git rev-parse --show-toplevel)
export VAGRANT_DOTFILE_PATH=$OPENDUT_REPO_ROOT/.vagrant
export VAGRANT_VAGRANTFILE=$OPENDUT_REPO_ROOT/.ci/deploy/opendut-vm/Vagrantfile

PowerShell:

$env:OPENDUT_REPO_ROOT=$(git rev-parse --show-toplevel)
$env:VAGRANT_DOTFILE_PATH="$env:OPENDUT_REPO_ROOT/.vagrant"
$env:VAGRANT_VAGRANTFILE="$env:OPENDUT_REPO_ROOT/.ci/deploy/opendut-vm/Vagrantfile"

- Set up the vagrant box (the following commands were tested in Git Bash and PowerShell)

vagrant up

Info

If the virtual machine is not allowed to create or use a private network, you may disable it by setting the environment variable OPENDUT_DISABLE_PRIVATE_NETWORK=true.

- Connect to the virtual machine via ssh (requires the environment variables)

vagrant ssh

Additional notes

You may want to configure an http proxy or a custom certificate authority. Details are in the Advanced usage section.

Setup THEO in Docker

Requirements

- Install Docker

Ubuntu / Debian

sudo apt install docker.io

On most other Linux distributions, the package is called docker.

- Install Docker Compose v2

Ubuntu / Debian

sudo apt install docker-compose-v2

Alternatively, see https://docs.docker.com/compose/install/linux/.

- Add your user into the docker group, to be allowed to use Docker commands without root permissions. (Mind that this has security implications.)

sudo groupadd docker # create `docker` group, if it does not exist
sudo gpasswd --add $USER docker # add your user to the `docker` group
newgrp docker # attempt to activate group without re-login

You may need to log out your user account and log back in for this to take effect.

- Update /etc/hosts

127.0.0.1 opendut.local
127.0.0.1 carl.opendut.local
127.0.0.1 auth.opendut.local
127.0.0.1 netbird-api.opendut.local
127.0.0.1 netbird-relay.opendut.local
127.0.0.1 signal.opendut.local
127.0.0.1 nginx-webdav.opendut.local
127.0.0.1 opentelemetry.opendut.local
127.0.0.1 monitoring.opendut.local

Start testing

Once you have set up and started the test environment, you may start testing the services.

User interface

The OpenDuT Browser is a web browser running in a docker container. It is based on the KasmVNC base image, which allows accessing containerized desktop applications from a web browser. Port forwarding is in place to access the browser from your host. It has all the necessary certificates pre-installed and is running in headless mode. You may use this OpenDuT Browser to access the services.

- Open the following address in your browser: http://localhost:3000

- Passwords of users in the test environment are generated. You can find them in the file .ci/deploy/localenv/data/secrets/.env.

- Services with user interface:

- https://carl.opendut.local

- https://auth.opendut.local

- https://monitoring.opendut.local

Use virtual machine for testing

This mode is used to test a distribution of OpenDuT.

- Ensure a distribution of openDuT is present

  - By either creating one yourself on your host:

cargo ci distribution

  - Or by downloading a release and copying it to the target directory target/ci/distribution/x86_64-unknown-linux-gnu/

- Login to the virtual machine from your host (assumes you have already set up the virtual machine)

cargo theo vagrant ssh

- Start test environment in opendut-vm:

cargo theo testenv start

- Start a cluster in opendut-vm:

cargo theo testenv cluster start

This will start several EDGAR containers and create an OpenDuT cluster.

Use virtual machine for development

This mode is used to test debug or development builds of OpenDuT. Instead of running CARL in docker, a proxy is used to forward requests to CARL running on the host.

Prepare the test environment to run in development mode:

- Start vagrant on host:

cargo theo vagrant up

- Connect to virtual machine from host:

cargo theo vagrant ssh

- Start developer test mode in opendut-vm:

cargo theo dev start

- Update /etc/hosts on host to resolve addresses to the virtual machine, see the setup section.

Use a different command to start the applications configured for the test environment:

- Run CARL on the host:

cargo theo dev carl

- Run LEA on the host:

cargo lea

- Run EDGAR on the machine where you started the test environment:

cargo theo dev edgar-shell

- Use either distribution build or debug build of EDGAR:

cat create_edgar_service.sh # to see how to start EDGAR step-by-step and how to use CLEO to manage devices and peers
./create_edgar_service.sh # to start distribution build of EDGAR

# notes for manual start
root@338b6f728a0b:/opt# type -a opendut-edgar
opendut-edgar is /usr/local/opendut/bin/distribution/opendut-edgar # extracted distribution build
root@338b6f728a0b:/opt# type -a opendut-cleo
opendut-cleo is /usr/local/opendut/bin/distribution/opendut-cleo # distribution build
opendut-cleo is /usr/local/opendut/bin/debug/opendut-cleo # debug build mounted as volume from host

Use CLEO

When using CLEO in your IDE or generally on the host, the address for keycloak needs to be overridden, as well as the address for CARL.

# Environment variables to use CARL on host

export OPENDUT_CLEO_NETWORK_CARL_HOST=localhost

export OPENDUT_CLEO_NETWORK_CARL_PORT=8080

# Environment variable to use keycloak in test environment

export OPENDUT_CLEO_NETWORK_OIDC_CLIENT_ISSUER_URL=http://localhost:8081/realms/opendut/

cargo cleo list peers

Run OpenDuT integration tests

There are some tests that depend on third-party software. These tests require the test environment to be running and reachable from the machine where the tests are executed.

- Start the test environment:

cargo theo vagrant ssh
cargo theo testenv start --skip-telemetry

- Run the tests that depend on the test environment:

export OPENDUT_RUN_KEYCLOAK_INTEGRATION_TESTS=true
export OPENDUT_RUN_NETBIRD_INTEGRATION_TESTS=true
cargo ci check

# or explicitly run specific tests only
cargo test --package opendut-auth-tests client::test_confidential_client_get_token --all-features --
cargo test --package opendut-auth-tests --all-features -- --nocapture
cargo test --package opendut-vpn-netbird client::integration_tests --all-features -- --nocapture

Known Issues

Copying data to and from the OpenDuT Browser

The OpenDuT Browser is a web browser running in a docker container. It is based on the KasmVNC base image, which allows accessing containerized desktop applications from a web browser. When using the OpenDuT Browser, you may want to copy data between it and your own browser. On Firefox this is restricted, so use the clipboard window on the left side of the OpenDuT Browser to copy data to your clipboard.

Cargo Target Directory

When running cargo tasks within the virtual machine, you may see the following warning:

warning: hard linking files in the incremental compilation cache failed. copying files instead. consider moving the cache directory to a file system which supports hard linking in session dir

This is mitigated by setting a different target directory for cargo in /home/vagrant/.bashrc on the virtual machine:

export CARGO_TARGET_DIR=$HOME/rust-target

Vagrant Permission Denied

Sometimes vagrant fails to insert the private key that was automatically generated. This might cause this error (seen in git-bash on Windows):

$ vagrant ssh

vagrant@127.0.0.1: Permission denied (publickey).

This can be fixed by overwriting the vagrant-generated key with the one inserted during provisioning:

cp ~/.ssh/id_rsa .vagrant/machines/opendut-vm/virtualbox/private_key

Vagrant Timeout

If the virtual machine is not allowed to create or use a private network it may cause a timeout during booting the virtual machine.

Timed out while waiting for the machine to boot. This means that

Vagrant was unable to communicate with the guest machine within

the configured ("config.vm.boot_timeout" value) time period.

- You may disable the private network by setting the environment variable OPENDUT_DISABLE_PRIVATE_NETWORK=true and explicitly halt and start the virtual machine again.

export OPENDUT_DISABLE_PRIVATE_NETWORK=true

vagrant halt

vagrant up

Vagrant Custom Certificate Authority

When running behind an intercepting http proxy, you may run into issues with SSL certificate verification.

ssl.SSLCertVerificationError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self-signed certificate in certificate chain (_ssl.c:1007)

This can be mitigated by adding the custom certificate authority to the trust store of the virtual machine.

- Place certificate authority file here: resources/development/tls/custom-ca.crt

- And re-run the provisioning of the virtual machine.

export CUSTOM_ROOT_CA=resources/development/tls/custom-ca.pem

vagrant provision

Ctrl+C in Vagrant SSH

When using cargo theo vagrant ssh on Windows and pressing Ctrl+C to terminate a command, the ssh session may be closed.

Netbird management invalid credentials

If keycloak was re-provisioned after the netbird management server, the management server may not be able to authenticate with keycloak anymore.

# docker logs netbird-management-1

[...]

2024-02-14T09:51:57Z WARN management/server/account.go:1174: user 59896d1b-45e6-48bb-ae79-aa17d5a2af94 not found in IDP

2024-02-14T09:51:57Z ERRO management/server/http/middleware/access_control.go:46: failed to get user from claims: failed to get account with token claims user 59896d1b-45e6-48bb-ae79-aa17d5a2af94 not found in the IdP

docker logs edgar-leader

[...]

Failed to create new peer.

[...]

Received status code indicating an error: HTTP status client error (403 Forbidden) for url (http://netbird-management/api/groups)

This may be fixed by destroying the netbird service:

cargo theo testenv destroy

Afterward you may restart the netbird service:

cargo theo testenv start

# or

cargo theo dev start

No space left on device

Error writing to file - write (28: No space left on device)

You may try to free up space on the virtual machine by (preferred order):

- Cleaning up the cargo target directory:

cargo clean
ls -l $CARGO_TARGET_DIR

- Removing old docker images and containers:

docker system prune --all
# and, if needed, with volumes
docker system prune --all --volumes

Advanced Usage

Use vagrant directly

Run vagrant commands directly instead of through THEO:

- Via Vagrant's CLI (bash commands run from the root of the repository):

export OPENDUT_REPO_ROOT=$(git rev-parse --show-toplevel)
export VAGRANT_DOTFILE_PATH=$OPENDUT_REPO_ROOT/.vagrant
export VAGRANT_VAGRANTFILE=$OPENDUT_REPO_ROOT/.ci/deploy/opendut-vm/Vagrantfile
vagrant up

- Provision vagrant with desktop environment:

ANSIBLE_SKIP_TAGS="" vagrant provision

Re-provision the virtual machine

This is recommended after potentially breaking changes to the virtual machine.

- Following command will re-run the ansible playbook to re-provision the virtual machine. Run from host:

cargo theo vagrant provision

- Destroy test environment and re-create it, run within the virtual machine:

cargo theo vagrant ssh

cargo theo testenv destroy

cargo theo testenv start

Cross compile THEO for Windows on Linux

cross build --release --target x86_64-pc-windows-gnu --bin opendut-theo

# will place binary here

target/x86_64-pc-windows-gnu/release/opendut-theo.exe

Proxy configuration

In case you are working behind an http proxy, you need additional steps to get the test environment up and running.

The following steps pick up just before you start up the virtual machine with vagrant up.

A list of all domains used by the test environment is reflected in the Vagrantfile, see field vm_default_no_proxy in .ci/deploy/opendut-vm/Vagrantfile.

It is important to note that the proxy address has to be accessible from the host while provisioning the virtual machine and within the virtual machine.

If you have a proxy server on your localhost you need to make this in two steps:

- Use proxy on your localhost

- Start the VM without provisioning it.

This should create the vagrant network interface with network range 192.168.56.0/24.

export HTTP_PROXY=http://localhost:3128

export HTTPS_PROXY=http://localhost:3128

vagrant up --no-provision

- Use proxy on private network address 192.168.56.1

- Install proxy plugin for vagrant

vagrant plugin install vagrant-proxyconf

- Configure vagrant to use the proxy by creating a .env file in the root of the repository with the following content:

OPENDUT_VM_HTTP_PROXY=http://192.168.56.1:3128

OPENDUT_VM_HTTPS_PROXY=http://192.168.56.1:3128

#OPENDUT_VM_NO_PROXY=my-corporate-opendut-test-domain.local <-- optional, if you want to test other domains

- Make sure this address allows access to the internet:

curl --max-time 2 --connect-timeout 1 --proxy http://192.168.56.1:3128 google.de

- Reapply the configuration to the VM:

$ vagrant up --provision

Bringing machine 'opendut-vm' up with 'virtualbox' provider...

==> opendut-vm: Configuring proxy for Apt...

==> opendut-vm: Configuring proxy for Docker...

==> opendut-vm: Configuring proxy environment variables...

==> opendut-vm: Configuring proxy for Git...
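The reason for the two steps is that `localhost` inside the virtual machine refers to the virtual machine itself, so a proxy URL pointing at the loopback interface cannot work there. The following sketch checks a proxy URL for this mistake before it is written to the .env file. It is a string-level heuristic only, and the function name `proxy_url_is_not_loopback` is hypothetical.

```shell
# Hypothetical check (not part of openDuT): succeed only if the proxy URL does
# not point at the loopback interface, which inside the VM is the VM itself.
proxy_url_is_not_loopback() {
  case "$1" in
    *://localhost|*://localhost:*|*://localhost/*|*://127.*) return 1 ;;
    *) return 0 ;;
  esac
}

# Example with the address used above.
if proxy_url_is_not_loopback "http://192.168.56.1:3128"; then
  echo "proxy URL looks usable from within the VM"
fi
```

The check does not contact the proxy; the `curl` command above remains the actual connectivity test.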

Custom root certificate authority

This section describes how to provision the virtual machine when running behind an intercepting HTTP proxy.

This is also used in the docker containers to trust the custom certificate authority.

All certificate authorities matching the following path will be trusted in the docker container:

./resources/development/tls/*-ca.pem.

The following steps need to be done before provisioning the virtual machine.

- Place certificate authority file here:

resources/development/tls/custom-ca.pem

- Optionally, disable private network definition of vagrant, if this causes errors.

export CUSTOM_ROOT_CA=resources/development/tls/custom-ca.pem

export OPENDUT_DISABLE_PRIVATE_NETWORK=true # optional

vagrant provision
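Since only files matching the `*-ca.pem` pattern are trusted in the docker containers, a quick check of the file name and content before provisioning can save a provisioning round trip. This is a minimal sketch; the function name `is_trusted_ca_file` is hypothetical, and the content check only looks for a PEM certificate header.

```shell
# Hypothetical check (not part of openDuT): does the file name match the
# *-ca.pem pattern quoted above, and does the file contain a PEM
# certificate header?
is_trusted_ca_file() {
  case "$(basename "$1")" in
    *-ca.pem) ;;
    *) return 1 ;;
  esac
  # "--" keeps grep from reading the leading dashes as an option.
  grep -q -- '-----BEGIN CERTIFICATE-----' "$1"
}
```

For example, `is_trusted_ca_file resources/development/tls/custom-ca.pem && vagrant provision` would only provision when the file passes both checks.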

Give the virtual machine more CPU cores and more memory

In case you want to build the project you may want to assign more CPU cores, more memory or more disk to your virtual machine.

Just add the following environment variables to the .env file and reboot the virtual machine.

- Configure more memory and/or CPUs:

OPENDUT_VM_MEMORY=32768

OPENDUT_VM_CPUS=8

cargo theo vagrant halt

cargo theo vagrant up

- Configure more disk space:

- Most of the time you may want to clean up the cargo target directory inside the opendut-vm if you run out of disk space:

cargo clean # should clean out target directory in ~/rust-target

- If this is still not enough you can install the vagrant disk size plugin:

vagrant plugin install vagrant-disksize

- Add the following environment variable:

OPENDUT_VM_DISK_SIZE=80

- And reboot the virtual machine to have more disk space unlocked.
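Editing the .env file by hand works, but repeated changes tend to leave duplicate assignments behind. The sketch below sets a variable so that an existing assignment is replaced rather than duplicated. The helper name `set_env_var` is hypothetical and not part of openDuT or THEO.

```shell
# Hypothetical helper (not part of openDuT): set KEY=VALUE in an env file,
# replacing an existing assignment of KEY instead of appending a duplicate.
# The new assignment is always written as the last line of the file.
set_env_var() {
  env_file="$1"; key="$2"; value="$3"
  touch "$env_file"
  # Keep every line that does not assign KEY; grep exits non-zero when
  # nothing is left, which is fine here.
  grep -v "^${key}=" "$env_file" > "$env_file.tmp" || true
  printf '%s=%s\n' "$key" "$value" >> "$env_file.tmp"
  mv "$env_file.tmp" "$env_file"
}
```

A call such as `set_env_var .env OPENDUT_VM_MEMORY 32768` followed by `cargo theo vagrant halt` and `cargo theo vagrant up` corresponds to the manual steps above.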

Custom NTP server for the opendut virtual machine

If the current time in the virtual machine is wrong and time synchronization does not happen with the default NTP server ntp.ubuntu.com, you can configure a custom NTP server.

- Add the following environment variable to the .env file:

OPENDUT_VM_NTP_SERVER=time.yourcorp.com

- And provision the virtual machine:

cargo theo vagrant provision

Test a different version of openDuT components

By default, the test environment expects a distribution of openDuT components that matches the version of the repository you are working on.

If you want to test another version of openDuT components, you can set the following environment variable in the .env file:

OPENDUT_CARL_IMAGE_VERSION=0.9.0-alpha

This will make the test environment load the specified version of the CARL distribution.

Make sure that the specified version is already built or downloaded here: target/ci/distribution/x86_64-unknown-linux-gnu.

If you use this feature, a warning will be printed when starting the test environment.

Load custom configuration in localenv and testenv

By default, the localenv is loaded with the following configuration files:

- Environment variables:

./.ci/deploy/localenv/.env.development

- Docker compose file:

./.ci/deploy/localenv/docker-compose.yml

The localenv can be customized by creating the following files:

- Environment variables:

./.ci/deploy/localenv/.env.override

- Docker compose file:

./.ci/deploy/localenv/docker-compose.override.yml
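Docker Compose merges several compose files when they are passed with repeated `-f` options, with later files overriding earlier ones. The sketch below builds such an argument list and includes the override file only if it exists. It illustrates the general mechanism and is not necessarily how THEO invokes Docker Compose; the function name `compose_file_args` is hypothetical, and paths containing spaces are not handled.

```shell
# Hypothetical sketch (not necessarily how THEO invokes Docker Compose):
# print the "-f" arguments for a deployment directory, adding the override
# file only when it exists.
compose_file_args() {
  dir="$1"
  args="-f $dir/docker-compose.yml"
  if [ -f "$dir/docker-compose.override.yml" ]; then
    args="$args -f $dir/docker-compose.override.yml"
  fi
  echo "$args"
}

# Example: show the arguments for the localenv directory.
compose_file_args ./.ci/deploy/localenv
```

The printed arguments could then be passed to a command such as `docker compose $(compose_file_args ./.ci/deploy/localenv) config` to inspect the merged configuration.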

EDGAR Testenv cluster

The testenv cluster also supports custom configuration:

- Docker compose file:

.ci/deploy/testenv/edgar/docker-compose.yml

- Override file location:

.ci/deploy/testenv/edgar/docker-compose.override.yml

Enable client authentication

To enable client authentication in localenv and testenv:

- Copy the following files:

cp .ci/deploy/localenv/docker-compose.override.mtls.yml ./.ci/deploy/localenv/docker-compose.override.yml

cp .ci/deploy/testenv/edgar/docker-compose.override.mtls.yml .ci/deploy/testenv/edgar/docker-compose.override.yml

- And restart the localenv.

Secrets for test environment

This repository contains secrets for testing purposes. These secrets are not supposed to be used in a production environment. There are two formats defined in the repository that document their location:

- ~/.gitguardian.yml

- .secretscanner-false-positives.json

Alternative strategy to avoid this: auto-generate secrets during test environment setup.

GitGuardian

Getting started with ggshield

- Install ggshield

sudo apt install -y python3-pip

pip install ggshield

export PATH=~/.local/bin/:$PATH

- Log in to https://dashboard.gitguardian.com

- Either use PAT or service account (https://docs.gitguardian.com/api-docs/service-accounts)

- Go to API -> Personal access tokens

- and create a token

- Use API token to log in:

ggshield auth login --method token

Scan repository

- See https://docs.gitguardian.com/ggshield-docs/getting-started

- Scan repo:

ggshield secret scan repo ./

- Ignore secrets found in last run and remove them or document them in .gitguardian.yml:

ggshield secret ignore --last-found

- Review changes in .gitguardian.yml and commit

Release

Learn how to create releases of openDuT.

Publishing a release

This is a checklist for the steps to take to create a release for public usage.

- Ensure the changelog is up-to-date.

- Change top-most changelog heading from "Unreleased" to the new version number and add the current date.

- Increment version number in workspace Cargo.toml.

- Run cargo ci check to update all Cargo.lock files.

- Increment the version of the CARL container used in CI/CD deployments (in the .ci/ folder).

- Create commit and push to development.

- Open PR from development to main.

- Merge PR once its checks have succeeded.

- Tag the last commit on main with the respective version number in the format "v1.2.3" and push the tag.
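A mistyped tag is easy to push and awkward to retract, so it can be worth validating the tag name against the "v1.2.3" format first. This is a minimal sketch; the function name `is_release_tag` is hypothetical, and it deliberately rejects development versions such as "1.2.3-alpha".

```shell
# Hypothetical check (not part of openDuT): accept only tags of the form
# v<major>.<minor>.<patch>, e.g. "v1.2.3".
is_release_tag() {
  printf '%s\n' "$1" | grep -Eq '^v[0-9]+\.[0-9]+\.[0-9]+$'
}

# Example: report whether a candidate tag has the expected format.
if is_release_tag "v1.2.3"; then
  echo "tag format ok"
fi
```

It could be used as a guard, for example `is_release_tag "$TAG" && git tag "$TAG" && git push origin "$TAG"`, where `TAG` is a variable you set yourself.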

After the release

- Increment version number in workspace Cargo.toml to development version, e.g. "1.2.3-alpha".

- Run cargo ci check to update all Cargo.lock files.

- Add a new heading "Unreleased" to the changelog with contents "tbd.".

- Create commit and push to development.

- Announce the release on the mailing list and in the chat.

Manually Building a Release

To build release artifacts for distribution, run:

cargo ci distribution

The artifacts are placed under target/ci/distribution/.

To build a docker container of CARL and push it to the configured docker registry:

cargo ci carl docker --publish

This will publish opendut-carl to ghcr.io/eclipse-opendut/opendut-carl:x.y.z.

The version defined in opendut-carl/Cargo.toml is used as docker tag by default.

Alternative platform

If you want to build artifacts for a different platform, use the following:

cargo ci distribution --target armv7-unknown-linux-gnueabihf

The currently supported target platforms are:

- x86_64-unknown-linux-gnu

- armv7-unknown-linux-gnueabihf

- aarch64-unknown-linux-gnu
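To build the distribution for every supported platform in one go, the command can be wrapped in a loop over the targets listed above. This is a sketch only: the function name `build_all_targets` and the `DRY_RUN` switch are hypothetical additions for illustration and not features of `cargo ci`.

```shell
# Targets taken from the list above.
TARGETS="x86_64-unknown-linux-gnu armv7-unknown-linux-gnueabihf aarch64-unknown-linux-gnu"

# Hypothetical wrapper: with DRY_RUN=1 (an assumption of this sketch, not a
# cargo-ci feature) the commands are only printed instead of executed.
build_all_targets() {
  for target in $TARGETS; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "cargo ci distribution --target $target"
    else
      # Stop at the first failing target.
      cargo ci distribution --target "$target" || return 1
    fi
  done
}
```

Setting `DRY_RUN=1` before calling `build_all_targets` prints the three commands without building anything.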

Alternative docker registry

To publish the docker container to a container registry other than ghcr.io:

export OPENDUT_DOCKER_IMAGE_HOST=other-registry.example.net

export OPENDUT_DOCKER_IMAGE_NAMESPACE=opendut

cargo ci carl docker --publish --tag 0.1.1

This will publish opendut-carl to 'other-registry.example.net/opendut/opendut-carl:0.1.1'.
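The resulting image reference can be previewed before publishing. The sketch below assumes that the reference is composed as host/namespace/name:tag, mirroring the default ghcr.io/eclipse-opendut/opendut-carl:x.y.z; the function name `image_reference` and the fallback values are assumptions for illustration, not the actual implementation in `cargo ci`.

```shell
# Hypothetical helper (not part of openDuT): compose an image reference as
# <host>/<namespace>/<name>:<tag>. The fallbacks mirror the default location
# ghcr.io/eclipse-opendut/... mentioned above and are an assumption.
image_reference() {
  host="${OPENDUT_DOCKER_IMAGE_HOST:-ghcr.io}"
  namespace="${OPENDUT_DOCKER_IMAGE_NAMESPACE:-eclipse-opendut}"
  echo "$host/$namespace/$1:$2"
}

# Example: preview the reference for name "opendut-carl" and tag "0.1.1".
image_reference opendut-carl 0.1.1
```

With the two environment variables from the example above exported, the same call would print the reference on the alternative registry instead.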

CARL gRPC API

Here is an example script showing how to use the gRPC API of CARL with authentication and TLS:

#!/bin/bash

# To use this script, provide a .env file in the working directory with:

# SSL_CERT_FILE=<PATH>

# OPENDUT_REPO_ROOT=<PATH>

# OPENDUT_CLEO_NETWORK_OIDC_CLIENT_SECRET=<SECRET>

#

# The $OPENDUT_CLEO_NETWORK_OIDC_CLIENT_SECRET can be found in .ci/deploy/localenv/data/secrets/.env under the openDuT repository on the server hosting CARL.

# The CA certificate can be found in .ci/deploy/localenv/data/secrets/pki/opendut-ca.pem. Place this in the $SSL_CERT_FILE path.

# Additionally clone the openDuT repository to your PC into the path $OPENDUT_REPO_ROOT.

# You also have to install the `grpcurl` utility.

set -euo pipefail

set -a # exports all envs

source .env

set +a